Yes, you can create voice-controlled WebApps with DataFlex, and here’s how…

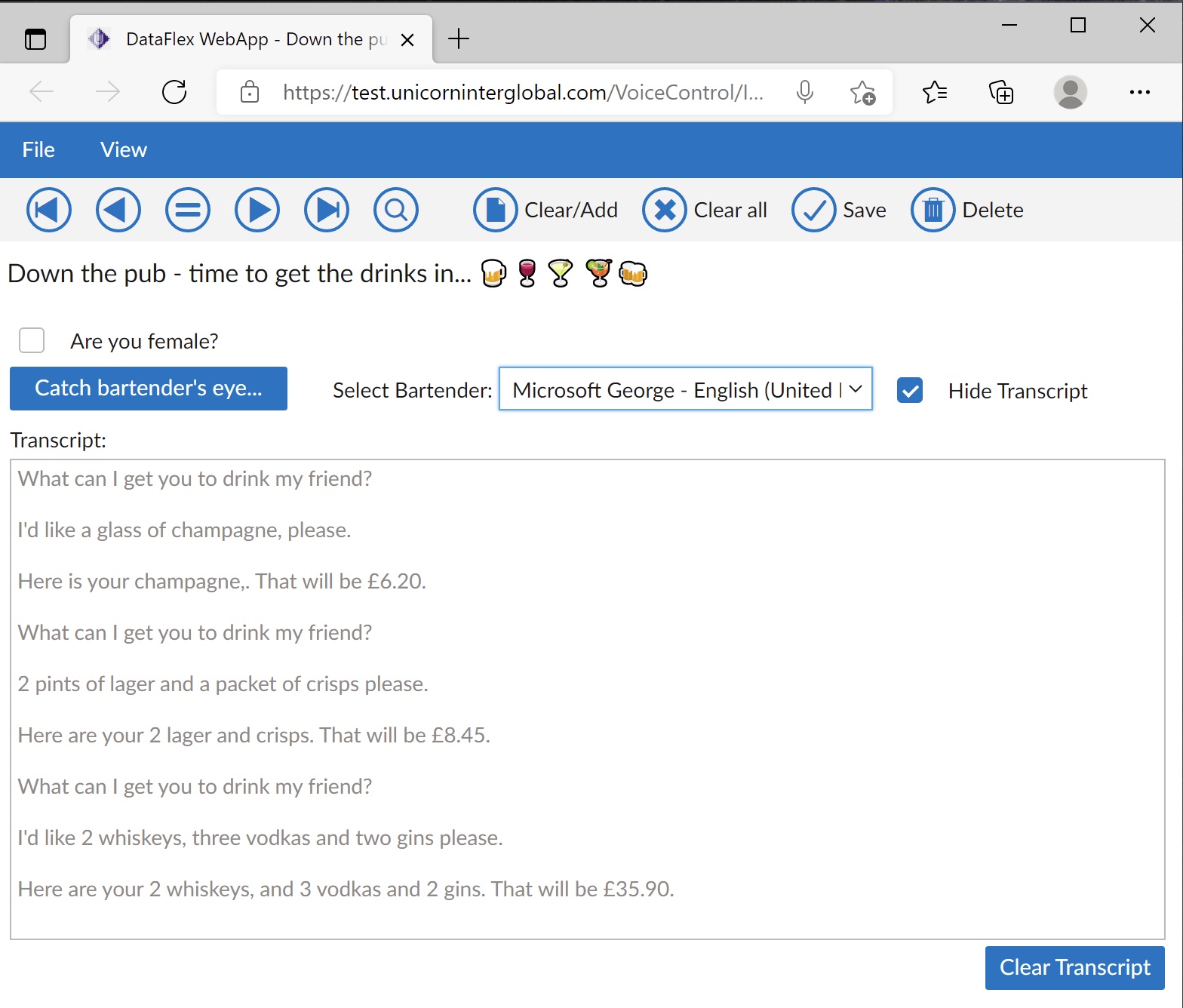

You can try out the sample view shown above here.

Inspired by Johan Broddfelt’s recent pair of excellent “Discovering DataFlex” YouTube videos, “Search using your voice” and “Let your application speak”, I thought I’d create a pair of DataFlex framework components to provide those capabilities. Johan does this in his videos, but in the specific context of his demonstration app; I wanted something more generic.

This is all done in DataFlex 2021 (v20.0); the nifty set of drinks symbols in the view caption shown above is a not-so-subtle giveaway that Unicode is involved here.

The components for voice-controlled WebApps

The bit that listens

The speech recognition components – cWebVoiceControl and its client-side partner, WebVoiceControl.js – are based on the cWebBaseControl/WebBaseControl abstract class provided by the framework. The control has no visual aspect, so all I had to do was give it a set of properties (quite an extensive set, as it turns out) and a single method: startListening(). (The underlying SpeechRecognition object also supports stop() and abort() methods, but I didn’t mess with those.)

The startListening method first instantiates a SpeechRecognition object. Johan checked for two different constructors for this, window.SpeechRecognition and window.webkitSpeechRecognition, and used whichever was defined first (a clever JavaScript trick using its “or” operator, “||”). However, I found that the browsers I have so far identified as supporting this (Google Chrome, Microsoft Edge, Safari and Brave, this last to a non-working extent!) all used the latter. The method then sets that object’s properties from those of the enclosing framework component and attaches handler functions for the various events such an object can raise. In general, each handler checks whether the corresponding pbServer{Event} property is set to True in the component and, if it is, calls the component’s “serverAction” method, passing it the appropriate server-side message.
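To make that flow concrete, here is a minimal JavaScript sketch of a startListening() routine. It is not the code in WebVoiceControl.js: the `control` object stands in for the framework component, and its pbServerOn* flag names, the serverAction(message, params) signature and the message names "OnResult", "OnError" and "OnEnd" are all hypothetical. The SpeechRecognition members it uses (lang, continuous, interimResults, maxAlternatives, onresult, onerror, onend, start()) are part of the Web Speech API. Passing `win` in, rather than using the global window, lets the sketch run against a stand-in object outside a browser.

```javascript
// Hypothetical sketch of startListening(): `win` is the browser window (or a
// stand-in) and `control` stands in for the framework component. The
// pbServerOn* flag names, serverAction(message, params) and the message
// names are assumptions, not the real WebVoiceControl.js API.
function startListening(win, control) {
  // Prefer the standard constructor; fall back to the WebKit-prefixed one.
  const Recognition = win.SpeechRecognition || win.webkitSpeechRecognition;
  if (!Recognition) {
    throw new Error("Speech recognition is not supported in this browser");
  }
  const recognition = new Recognition();

  // Copy settings from the component onto the recognition object.
  recognition.lang = control.lang;
  recognition.continuous = control.continuous;
  recognition.interimResults = control.interimResults;
  recognition.maxAlternatives = control.maxAlternatives;

  // Each handler only calls the server if the matching flag is set.
  recognition.onresult = (event) => {
    if (!control.pbServerOnResult) return;
    // First (typically most likely) alternative of the most recent result.
    const latest = event.results[event.results.length - 1][0];
    control.serverAction("OnResult", [latest.transcript, latest.confidence]);
  };
  recognition.onerror = (event) => {
    if (control.pbServerOnError) control.serverAction("OnError", [event.error]);
  };
  recognition.onend = () => {
    if (control.pbServerOnEnd) control.serverAction("OnEnd", []);
  };

  recognition.start();
  return recognition;
}
```

Keeping the flag check inside each handler means events nobody cares about never cost a round trip to the server.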

The bit that speaks

The voice synthesis components – cWebSpeechControl and the client-side WebSpeechControl.js – are again based on the WebBaseControl abstract class. Again, there was no need for a visible aspect, so all that was initially required was a speak() method. This creates a SpeechSynthesisUtterance object with the text passed to it, sets some properties on it, then passes it to window.speechSynthesis.speak(). However, in order to offer a range of “voices” I found I needed to implement a getVoices() method as well, which sends a list of the available voices back to the server. Interestingly, in most of the browsers I’ve tried, this fails the first time (specifically, window.speechSynthesis.getVoices() comes back empty, so the .forEach() over its result does nothing first time through; if you can explain why, do let me know!), but works thereafter. (Note: this is why you usually have to try a couple of times to “Catch bartender’s eye”, but that just adds to the authentic bar-room experience, right?)
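On the first-time getVoices() puzzle, a common explanation is that some browsers (Chrome in particular) load their voice list asynchronously, so getVoices() returns an empty array until the speechSynthesis object fires a voiceschanged event. The sketch below assumes the browser does fire that event, and waits for it when the list is empty; alongside it is a minimal speak(). As before, `win` is passed in so the code can run against stand-ins, and the option names (lang, rate, pitch, voiceName) are illustrative rather than cWebSpeechControl’s actual properties.

```javascript
// Sketch only: `win` is the browser window (or a stand-in); option names
// are illustrative, not cWebSpeechControl's real properties.

// Speak `text`, optionally with a language, rate, pitch and named voice.
function speak(win, text, options = {}) {
  const synth = win.speechSynthesis;
  const utterance = new win.SpeechSynthesisUtterance(text);
  if (options.lang) utterance.lang = options.lang;
  if (options.rate !== undefined) utterance.rate = options.rate;    // 0.1 to 10, default 1
  if (options.pitch !== undefined) utterance.pitch = options.pitch; // 0 to 2, default 1
  if (options.voiceName) {
    // utterance.voice must be one of the voice objects from getVoices().
    const voice = synth.getVoices().find((v) => v.name === options.voiceName);
    if (voice) utterance.voice = voice;
  }
  synth.speak(utterance);
  return utterance;
}

// Call `callback` with the voice list. If the list is still empty, wait for
// the "voiceschanged" event (assumes the browser fires it) and try again.
function withVoices(synth, callback) {
  const voices = synth.getVoices();
  if (voices.length > 0) {
    callback(voices);
    return;
  }
  const onChanged = () => {
    synth.removeEventListener("voiceschanged", onChanged);
    callback(synth.getVoices());
  };
  synth.addEventListener("voiceschanged", onChanged);
}
```

In a page this would be called as `withVoices(window.speechSynthesis, (voices) => { /* send names to the server */ })`, so the list is reported once it is actually available instead of on the second attempt.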

The bit in between

Although some of the logic for controlling the flow of the conversation is in the view itself, I found the parsing of the user’s response into structured data really required its own component. The cOrderParser class is, in a way, a very dumb AI (it is so stupid it thinks “a gin and tonic” is two different drinks; Skynet, Jarvis and Ultron need not look to their laurels anytime soon!). You might not have to be super-smart to work behind a bar, but I still wouldn’t trust this guy with the job!

On creation (so in its Construct_Object procedure) it builds a pair of arrays, the names of the things you can order and their matching prices, and stores those in properties. When the speech recognition component (oListener, a cWebVoiceControl object) receives an order, that order is passed to the parser as a string. The parser lowercases the string and breaks it into words (using StrSplitToArray()), then runs through those looking for quantities (whether spelled out, like “two”, or as digits, like “2”) and for items on its list. There is a bit of messing around to fix up things that seem like they should work but often don’t: plurals; SpeechRecognition transcribing “brandies” as “Brandy’s”; checking, when it spots “wine”, whether the previous word was “red” or “white”; and so forth. Out of all that it assembles an array of items with their quantities, prices and item totals, and returns that.
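The parsing steps above can be sketched in JavaScript as follows. This is a hypothetical port for illustration, not the actual cOrderParser (which is DataFlex and uses StrSplitToArray()). The menu items and prices are made up, prices are held in minor units (pence) so totals stay exact integers, and the singularising rules are deliberately crude. Like the original, it happily treats “a gin and tonic” as two separate drinks.

```javascript
// Hypothetical menu: parallel arrays of names and prices, the latter in
// minor units (pence) so totals stay exact integers.
function buildMenu() {
  return {
    names: ["beer", "brandy", "gin", "tonic", "whisky", "red wine", "white wine"],
    prices: [450, 600, 550, 200, 650, 700, 700],
  };
}

// Spoken quantities; "a"/"an" count as one.
const NUMBER_WORDS = new Map([
  ["a", 1], ["an", 1], ["one", 1], ["two", 2], ["three", 3], ["four", 4],
  ["five", 5], ["six", 6], ["seven", 7], ["eight", 8], ["nine", 9], ["ten", 10],
]);

// Crude singularising: "brandy's" -> "brandy", "brandies" -> "brandy",
// "beers" -> "beer" (words ending in "ss" are left alone).
function normalise(word) {
  let w = word.replace(/'s$/, "");
  if (w.endsWith("ies")) w = w.slice(0, -3) + "y";
  else if (w.endsWith("s") && !w.endsWith("ss")) w = w.slice(0, -1);
  return w;
}

function parseOrder(text, menu) {
  // Lowercase, unify curly apostrophes, turn other punctuation into spaces,
  // then split into words.
  const words = text
    .toLowerCase()
    .replace(/\u2019/g, "'")
    .replace(/[^a-z0-9'\s]/g, " ")
    .split(/\s+/)
    .filter(Boolean);

  const order = [];
  let quantity = 1;
  for (let i = 0; i < words.length; i++) {
    const word = words[i];
    if (/^\d+$/.test(word)) { quantity = parseInt(word, 10); continue; }
    if (NUMBER_WORDS.has(word)) { quantity = NUMBER_WORDS.get(word); continue; }

    let name = normalise(word);
    // "wine" takes its colour from the previous word, if there is one.
    if (name === "wine" && (words[i - 1] === "red" || words[i - 1] === "white")) {
      name = words[i - 1] + " wine";
    }
    const index = menu.names.indexOf(name);
    if (index === -1) continue; // not on the menu: ignore the word

    const price = menu.prices[index];
    order.push({ item: name, quantity, price, total: quantity * price });
    quantity = 1; // reset for the next item
  }
  return order;
}
```

For example, `parseOrder("Two beers and three Brandy's, please", buildMenu())` returns two lines: `{ item: "beer", quantity: 2, price: 450, total: 900 }` and `{ item: "brandy", quantity: 3, price: 600, total: 1800 }`. Words that are neither quantities nor menu items are simply skipped, so a pending quantity survives filler such as “red” in “two red wines”.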

Other bits

There are a few other wrinkles. If you indicate you are female, English (UK) bartenders will address you as “madam” rather than “sir”, while Aussies use “sheila” and “mate”; to US bartenders we are all “my friend”, while to those ever-polite Canadians (if you can find one!) we are “my good fellow/lady”. (Hint: Microsoft Edge on Mac has an excellent selection of such “voices”.)
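These forms of address amount to a small lookup keyed by the bartender voice’s language tag. A minimal sketch, assuming the voice’s BCP 47 tag (such as "en-GB") is used as the key; the function name and the empty-string result for unlisted locales are hypothetical choices, not taken from the sample app.

```javascript
// Hypothetical lookup of forms of address by the voice's language tag,
// using the terms described above.
const FORMS_OF_ADDRESS = new Map([
  ["en-GB", { female: "madam", male: "sir" }],
  ["en-AU", { female: "sheila", male: "mate" }],
  ["en-US", { female: "my friend", male: "my friend" }],
  ["en-CA", { female: "my good lady", male: "my good fellow" }],
]);

// Returns "" for locales not in the table (a hypothetical fallback).
function formOfAddress(voiceLang, isFemale) {
  const forms = FORMS_OF_ADDRESS.get(voiceLang);
  if (!forms) return "";
  return isFemale ? forms.female : forms.male;
}
```

So `"What'll it be, " + formOfAddress("en-AU", false) + "?"` gives “What'll it be, mate?”.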

If you live in one of the countries I’ve covered, you should be charged in local currency: £ and p sterling, US $ and ¢, eurozone €, or even ₹ (Indian rupees and paise, in case you didn’t recognise the symbol). This is done using a combination of the OnInitializeLocales procedure of the cWebApp object, an object of a little class called cLocalInfo, and the Accept-Language HTTP header your browser sends with its initial GET request for the page. To experiment, you can change your browser’s preferred language:

- In Chrome it is under Settings -> Advanced -> Language -> Add languages (down at the bottom): select one, then use its three-dots menu to select “Move to top”

- In Edge it is again under Settings -> Hamburger menu -> Languages -> Add languages button: select one, then use its three-dots menu to select “Move to the top”

- In Safari I’m afraid I have no clue; an exercise for the reader, perhaps.

You will need to refresh the app in the browser for the change to take effect (because that’s what calls OnInitializeLocales).
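The app does this in DataFlex, via OnInitializeLocales and cLocalInfo; the JavaScript below is only a hypothetical illustration of the same idea, not that code. It picks the highest-priority tag from an Accept-Language header (honouring q-values), maps the tag’s region to a currency, and formats a price with the standard Intl.NumberFormat API. The region table (with a deliberately partial list of eurozone countries) and the GBP fallback are assumptions, and only the display changes: no exchange-rate conversion is implied.

```javascript
// Parse an Accept-Language header such as "en-GB,en;q=0.9,fr;q=0.8" and
// return the tag with the highest q-value (ties keep header order).
function preferredLanguage(header) {
  const entries = (header || "")
    .split(",")
    .map((part, position) => {
      const [tag, ...params] = part.trim().split(";");
      let q = 1;
      for (const param of params) {
        const [key, value] = param.trim().split("=");
        if (key === "q") q = Number(value);
      }
      return { tag: tag.trim(), q, position };
    })
    // Drop empty tags, the "*" wildcard, q=0 and malformed q-values (NaN).
    .filter((e) => e.tag !== "" && e.tag !== "*" && e.q > 0);
  entries.sort((a, b) => b.q - a.q || a.position - b.position);
  return entries.length > 0 ? entries[0].tag : null;
}

// Hypothetical region-to-currency table covering the examples above;
// the eurozone entries are a partial list for illustration only.
const CURRENCY_BY_REGION = new Map([
  ["GB", "GBP"], ["US", "USD"], ["IN", "INR"],
  ["DE", "EUR"], ["FR", "EUR"], ["IE", "EUR"], ["IT", "EUR"], ["NL", "EUR"], ["ES", "EUR"],
]);

function currencyFor(languageTag, fallback = "GBP") {
  // Region = first two-letter subtag after the language, e.g. "en-GB" -> "GB".
  const region = (languageTag || "").split("-").slice(1).find((s) => /^[a-z]{2}$/i.test(s));
  return (region && CURRENCY_BY_REGION.get(region.toUpperCase())) || fallback;
}

function formatPrice(amount, acceptLanguageHeader) {
  const tag = preferredLanguage(acceptLanguageHeader);
  const currency = currencyFor(tag);
  let locale;
  try {
    locale = Intl.getCanonicalLocales(tag || [])[0];
  } catch {
    locale = undefined; // malformed tag: let Intl use its default locale
  }
  return new Intl.NumberFormat(locale, { style: "currency", currency }).format(amount);
}
```

With a header of `en-GB,en;q=0.9`, `formatPrice(4.5, header)` gives `£4.50`; with `en-US` it gives `$4.50`.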

Source

The source code involved can be found on GitHub at https://github.com/DataFlexCode/VoiceControl.